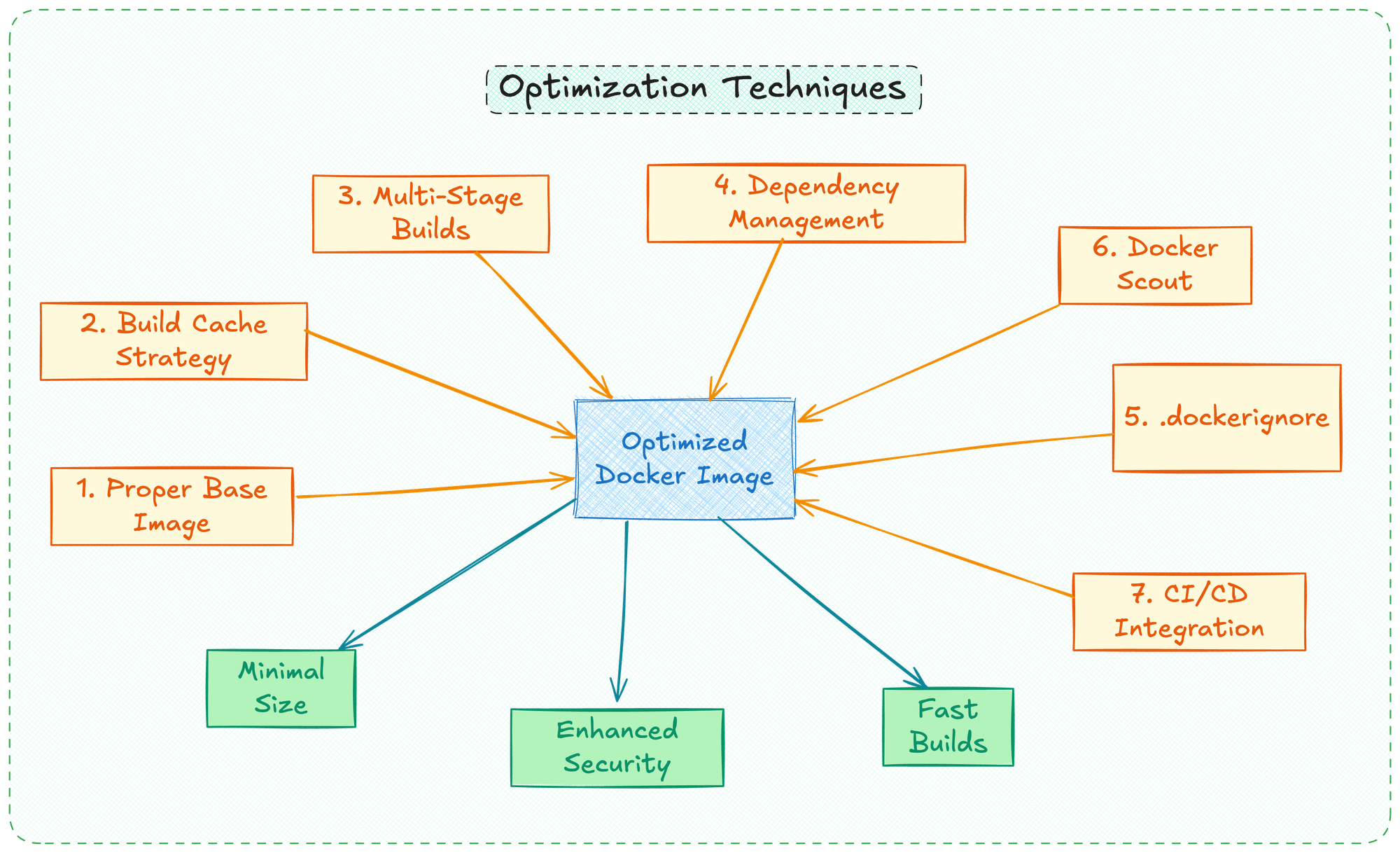

You Don't Know It, But You're Building Your Docker Images Incorrectly

If you're using Docker, chances are you've built some container images. But are you following the best practices? Many developers unknowingly create inefficient, insecure, or bloated Docker images. Let's explore how to build them the right way, with concrete examples showing how to reduce image sizes by up to 97%.

1. You're Using the Wrong Base Image

One of the most common mistakes is choosing an inappropriate base image. While ubuntu:latest might seem like a safe choice, it's overkill for most applications.

What you should do instead:

- Use official Docker images whenever possible - they're secure and well-maintained

- Choose minimal base images like Alpine Linux (under 6MB!) when appropriate

- Consider using distroless images for production deployments (as small as 1-8MB)

- Use specific version tags instead of `latest` to ensure reproducible builds

For example, switching from node:latest to node:alpine can reduce your base image size from over 1GB to around 240MB.
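In a Dockerfile, following these recommendations comes down to the `FROM` line. The sketch below assumes a Node.js app; the version number is only an example, and the digest is a placeholder you would fill in yourself.

```dockerfile
# Avoid: a floating tag on a full-size image; "latest" can change under you
# FROM node:latest

# Prefer: a pinned major version on a minimal variant (version is illustrative)
FROM node:20-alpine

# Strictest: pin the exact image by digest (placeholder, not a real digest)
# FROM node:20-alpine@sha256:<digest>
```

A tag such as `20-alpine` still moves as new 20.x releases ship. A digest never changes, at the cost of having to update it deliberately.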

2. Your Build Cache Is Working Against You

Docker's build cache is powerful, but misunderstanding how it works can lead to slower builds and larger images. Here's a real example showing proper cache usage:

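```dockerfile
# Bad example: any code change invalidates the cache for npm install
WORKDIR /app
COPY . /app
RUN npm install
```

```dockerfile
# Good example: the npm install layer is reused until package*.json changes
WORKDIR /app
COPY package*.json /app/
RUN npm install
COPY . /app
```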

According to Docker's documentation, cache invalidation occurs when:

- The command string of a `RUN` instruction changes

- The contents of files copied via `COPY` or `ADD` change

- An earlier step has already been invalidated, since every step after it must then be rebuilt
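To see how these rules cascade, here is a slightly extended version of the good example, annotated step by step. The base image tag is illustrative, and the final `npm run build` step is an assumed extra (it presumes the project defines a `build` script), added only to show that later steps are rebuilt too.

```dockerfile
# Illustrative base image and tag
FROM node:20-alpine
WORKDIR /app

# Cache hit while package.json/package-lock.json (and the steps above) are unchanged
COPY package*.json ./

# Cache hit only if the COPY above was a hit AND this command string is unchanged;
# editing the command, e.g. adding a flag, forces it to run again
RUN npm install

# Any edit to a copied source file invalidates this COPY...
COPY . .

# ...and every later step is rebuilt too, because its parent layer changed
RUN npm run build
```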

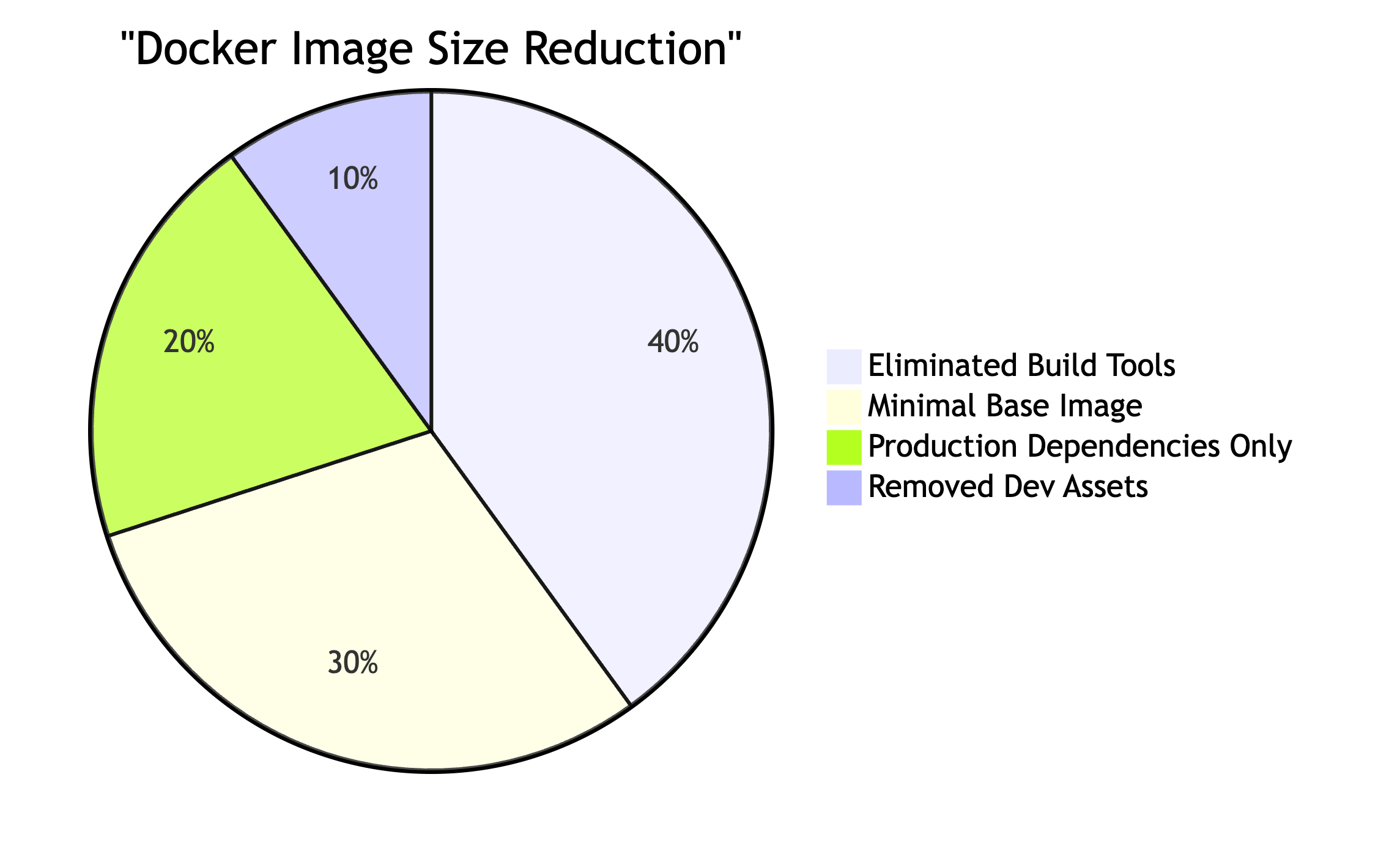

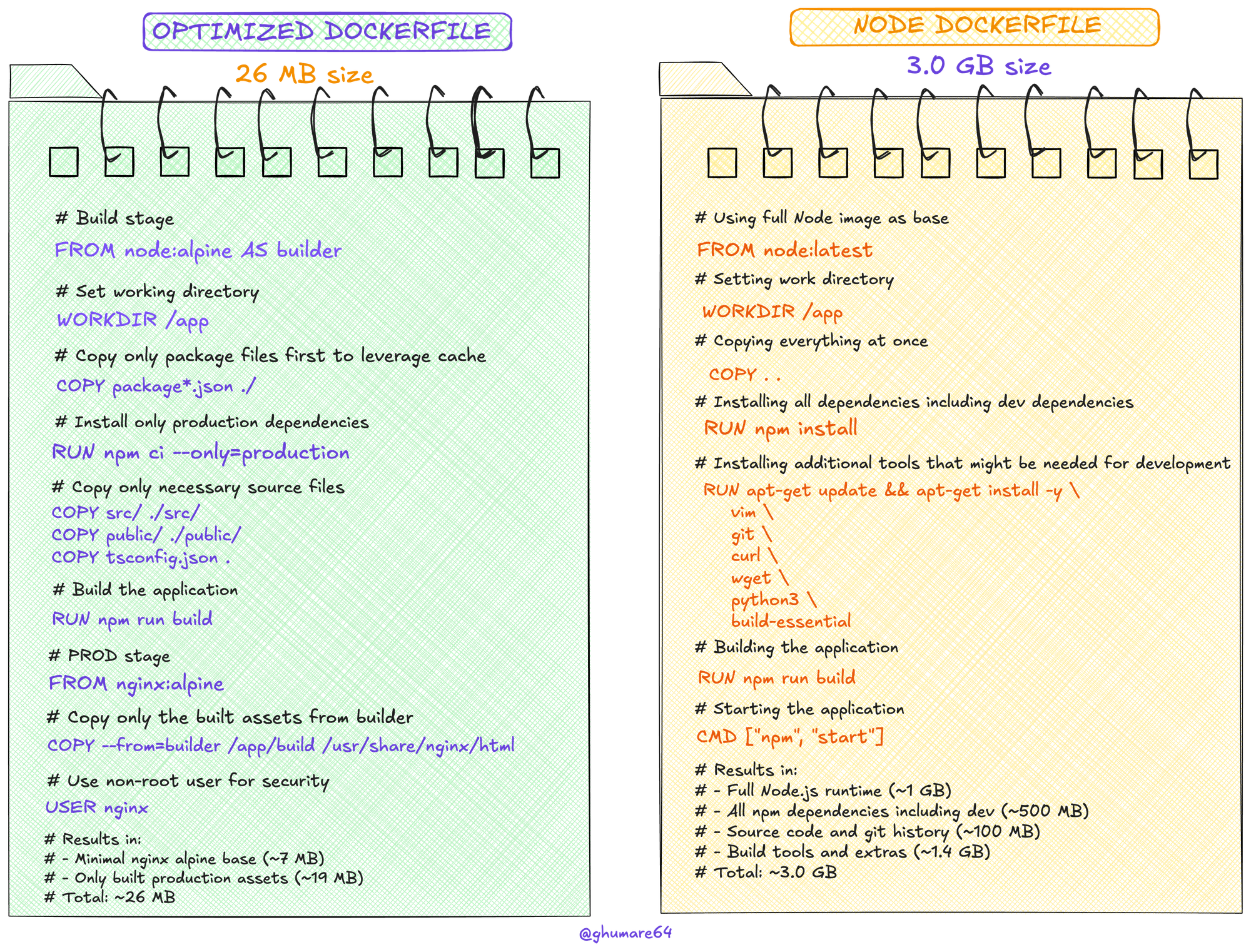

3. Multi-Stage Builds for Dramatic Size Reduction

Here's a real-world example showing how multi-stage builds can reduce a Node.js application from 3.0GB to just 26.1MB:

```dockerfile
# Build stage
FROM node:alpine AS builder
WORKDIR /app
COPY package*.json ./
RUN npm install
COPY . .
RUN npm run build

# Production stage
FROM nginx:alpine
COPY --from=builder /app/build /usr/share/nginx/html
EXPOSE 80
CMD ["nginx", "-g", "daemon off;"]
```

This approach:

- Separates build dependencies from runtime

- Only copies the necessary production artifacts

- Uses a minimal nginx base image (around 40-43MB)
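The example above serves a static build with nginx. For a Node.js server the same pattern applies; below is a minimal sketch with a distroless runtime stage. It assumes `npm run build` writes the compiled server to `dist/` with `dist/server.js` as its entry point (hypothetical paths), and the version tags are illustrative.

```dockerfile
# Build stage: full dependency set, including devDependencies, to compile the app
FROM node:20-bookworm-slim AS builder
WORKDIR /app
COPY package*.json ./
RUN npm ci
COPY . .
RUN npm run build
# Drop devDependencies now that the build is done
RUN npm prune --omit=dev

# Runtime stage: distroless Node.js image (Debian 12 based, no shell or npm)
FROM gcr.io/distroless/nodejs20-debian12
WORKDIR /app
COPY --from=builder /app/package.json ./
COPY --from=builder /app/node_modules ./node_modules
COPY --from=builder /app/dist ./dist
# The image's entrypoint is already "node", so CMD only names the script
CMD ["dist/server.js"]
```

The build stage uses a Debian 12 (bookworm) based image so that any native modules compiled there match the Debian 12 based runtime; compiling on Alpine (musl) and running on a glibc-based image can break them.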

4. Separate Development and Production Dependencies

A key strategy for reducing image size is properly separating your dependencies. In your package.json:

```jsonc
{
  "dependencies": {
    // Production dependencies only
  },
  "devDependencies": {
    // Development/build dependencies
  }
}
```

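Then in your Dockerfile, install only the production dependencies:

```dockerfile
RUN npm ci --omit=dev
```

`npm ci` installs exactly what package-lock.json specifies, so that file must be committed. On npm 6 and earlier, use `npm install --production` instead; newer npm versions treat `--production` as deprecated in favor of `--omit=dev`. Either way, only the production dependencies end up in your final image.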
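Put in context, a single-stage sketch for a Node.js server could look like this. It uses `--omit=dev`, the flag current npm versions prefer over `--production`; `server.js` is a hypothetical entry point and the version tag is illustrative.

```dockerfile
FROM node:20-alpine
WORKDIR /app

# With NODE_ENV=production, npm also omits devDependencies by default
ENV NODE_ENV=production

# Copy the manifests first so the dependency layer stays cached
COPY package*.json ./

# Install exactly what package-lock.json lists, skipping devDependencies
RUN npm ci --omit=dev

COPY . .
CMD ["node", "server.js"]
```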

5. Use .dockerignore Effectively

Create a proper .dockerignore file to exclude unnecessary files:

```
node_modules
npm-debug.log
Dockerfile
.dockerignore
.git
.gitignore
README.md
```

This prevents unnecessary files from being included in your build context, which can:

- Speed up builds

- Reduce image size

- Prevent sensitive information leaks

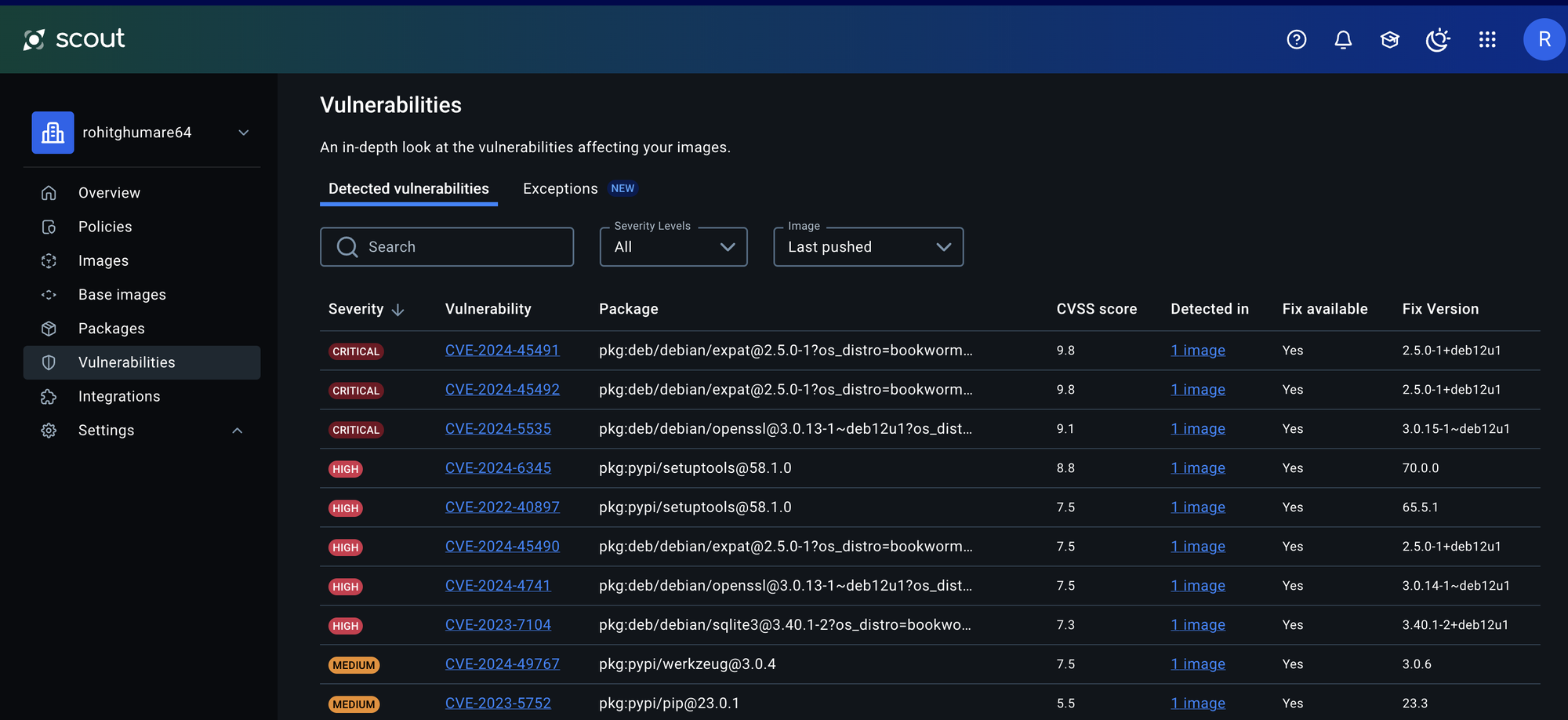

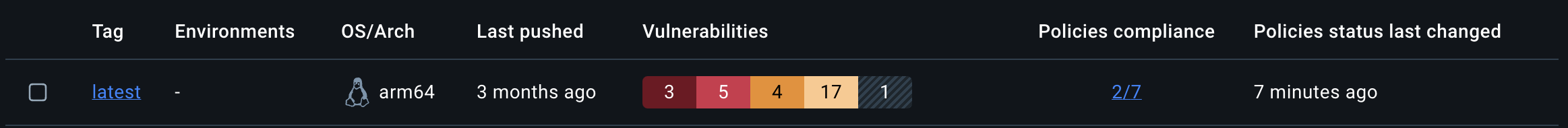

6. Leverage Docker Scout for Security

Docker Scout is a powerful tool for analyzing your images for vulnerabilities. According to Docker's documentation, it can:

- Identify security risks within your images before deployment

- Provide remediation suggestions for vulnerabilities

- Evaluate security standards compliance

- Integrate with your CI/CD pipeline

7. Build in CI/CD

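Use automated builds in your CI/CD pipeline to ensure consistency and reproducibility. For example, in a GitHub Actions workflow:

```yaml
jobs:
  build:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4
      - name: Build and test
        run: |
          docker build -t myapp:${{ github.sha }} .
          docker scout cves myapp:${{ github.sha }}
```

`docker scout` needs you to be signed in to Docker Hub, and the Docker Scout CLI plugin may need to be installed on the runner; Docker's docker/scout-action is an alternative for GitHub Actions.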

Real-World Results

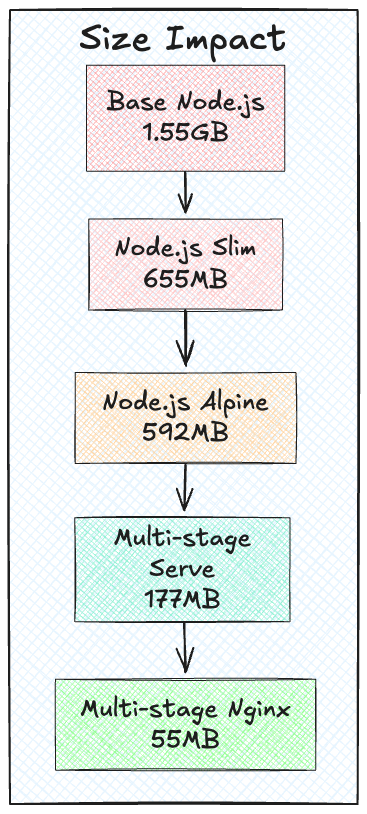

Here's a comparison of image sizes using different optimization techniques:

| Approach | Image Size |
|---|---|
| Base Node.js | 1.55GB |
| Node.js Slim | 655MB |
| Node.js Alpine | 592MB |
| Multi-stage with Serve | 177MB |
| Multi-stage with Nginx | 55MB |

That's roughly a 96% reduction in image size from the initial build (1.55GB down to 55MB)!
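The percentage follows from the first and last rows of the table, taking 1.55GB as 1,550MB:

$$
1 - \frac{55\ \text{MB}}{1550\ \text{MB}} \approx 1 - 0.0355 = 0.9645 \approx 96.5\%
$$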

Conclusion

Building efficient Docker images isn't just about making them work - it's about making them work efficiently and securely. By following these practices, you can:

- Reduce image sizes by up to 97%

- Improve build times

- Enhance security

- Reduce deployment costs

- Improve application performance

Remember: The goal isn't just to create a working container image, but to create one that's efficient, secure, and maintainable.

What is the best way to use Docker for your Kubernetes workloads? Try Taikun CloudWorks today. Book your free demo, and let our team simplify, enhance, and streamline your infrastructure management.