As more applications became cloud-native, containers became the ubiquitous way to bring flexibility and scalability to them. As those applications gained functionality and grew to run more and more containers, an automated system for container management became essential.

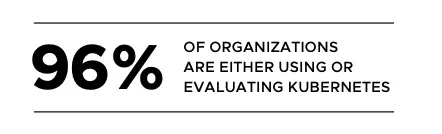

A system that creates, manages, and destroys containers as traffic requirements change is called a container orchestrator, and the practice is called container orchestration. Kubernetes is the leading container orchestration tool in cloud infrastructure today. It provides a layer of abstraction over containers running on cloud infrastructure and groups them into logical units for easier management and discovery.
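The core idea behind orchestration can be sketched as a reconciliation loop. The loop compares the number of containers you want with the number actually running, then creates or deletes containers to close the gap. The Python below is a toy illustration of that idea only. `ToyReplicaSet` and its methods are hypothetical names, not part of any Kubernetes API, and a real controller watches the Kubernetes API server rather than mutating an in-memory list.

```python
from dataclasses import dataclass, field
from itertools import count
from typing import Iterator, List


@dataclass
class ToyReplicaSet:
    """Hypothetical in-memory stand-in for a controller that owns identical containers."""

    desired: int                                       # how many containers we want
    running: List[str] = field(default_factory=list)   # containers observed as running
    _ids: Iterator[int] = field(default_factory=lambda: count(1), init=False, repr=False)

    def reconcile(self) -> List[str]:
        """Run one pass of the loop and return the actions taken."""
        actions = []
        # Too few containers (scale-up or a crash): create new ones.
        while len(self.running) < self.desired:
            name = f"container-{next(self._ids)}"
            self.running.append(name)
            actions.append(f"create {name}")
        # Too many containers (scale-down): delete the newest ones first.
        while len(self.running) > self.desired:
            actions.append(f"delete {self.running.pop()}")
        return actions


rs = ToyReplicaSet(desired=3)
print(rs.reconcile())  # ['create container-1', 'create container-2', 'create container-3']
rs.desired = 1         # traffic drops
print(rs.reconcile())  # ['delete container-3', 'delete container-2']
rs.running.clear()     # the last container crashes
print(rs.reconcile())  # ['create container-4']
```

Running the same loop repeatedly is what lets an orchestrator react both to changes you request, such as a new desired count, and to changes it observes, such as a crashed container.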

To understand why Kubernetes is so ubiquitous, and why it is the preferred choice for most cloud-native containerized applications, we need to go back to where it all started.

In this blog post, we cover how Kubernetes came into being and why it is the future of cloud computing. This post is also the starting point for our series of blogs on Kubernetes.

Birth of Kubernetes and CNCF

By 2014-15, Google had been running containerized applications for more than a decade. Internally, it relied on a container-oriented cluster management system called Borg. Borg managed billions of containers across Google's infrastructure and showed that a cloud could offer far more than just VMs and disks.

With Borg, the team at Google saw massive improvements in the automated deployment, scaling, and management of containerized applications. Unlocking that value more broadly, however, required wider industry adoption. It became clear that an open-source system built on the same ideas was the way to realize that potential. That is how Kubernetes was born: Google announced it as an open-source project in 2014, drawing on its experience with Borg.

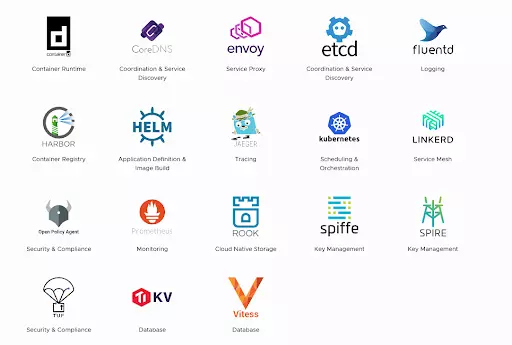

In 2015, Google also helped form a vendor-neutral foundation, the Cloud Native Computing Foundation (CNCF), to drive industry-wide collaboration and accelerate the growth of open-source cloud computing projects. Google contributed Kubernetes as the foundation's seed project. Over the years, CNCF has become a key driver of cloud computing’s growth story. Many ubiquitous open-source projects, such as Prometheus, Envoy, and of course Kubernetes, are part of CNCF.

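Certified Kubernetes, the CNCF's conformance program, is the industry standard for verifying that a Kubernetes distribution or hosted platform is consistent with upstream Kubernetes. Conformance keeps workloads portable between certified offerings, and certified distributions are the preferred choice for most organizations. CNCF is part of the non-profit Linux Foundation. You can view all the CNCF projects on the CNCF website.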

How Kubernetes changed the world of cloud computing

Before Kubernetes, most container management systems in the cloud were proprietary. For example, AWS had its own way of managing containers. This meant that whenever a company decided to move to the cloud, choosing a cloud provider was a big decision.

Because container management was proprietary to each cloud service, an application was locked into a cloud once it moved there, and the company could not easily switch to a different one. This vendor lock-in gave cloud services extraordinary leverage to charge more and to secure long-term commitments from customers.

Since the cost of moving between cloud service providers was very high, it slowed down the rate of transition to the cloud. Companies had to think much harder before making a move to the cloud.

Kubernetes changed all that.

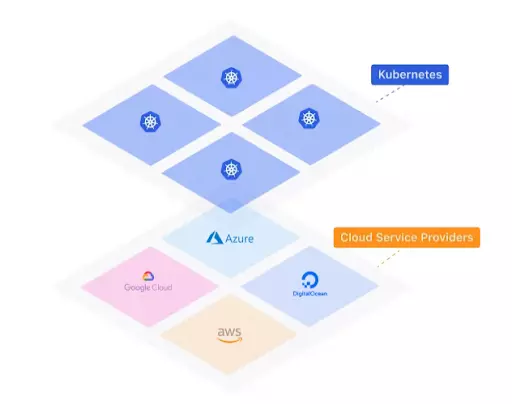

Kubernetes created a layer of abstraction over the cloud infrastructure. This allowed containerized applications to work with Kubernetes instead of proprietary container management technologies.

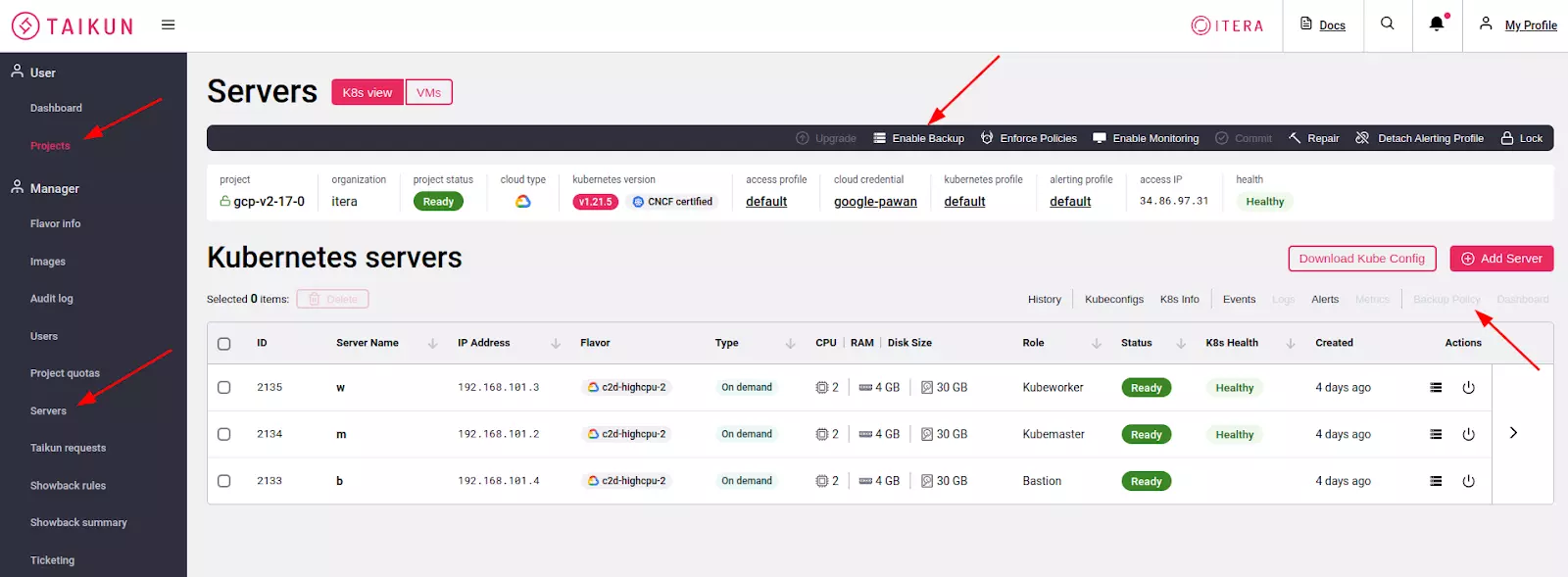

Because Kubernetes is open source, many developers have built tools to manage and monitor container infrastructure better. For example, Taikun is a Kubernetes management tool that gives you a central dashboard to manage container deployments across multiple cloud service platforms.

Companies adopted the Kubernetes offerings of cloud providers not only for their portability but also for their efficiency in container management, and every major cloud service provider now has one. AWS has Elastic Kubernetes Service (EKS), a managed Kubernetes service. Google Cloud offers Google Kubernetes Engine (GKE), while Microsoft Azure has Azure Kubernetes Service (AKS).

Why the world uses Kubernetes

Kubernetes (K8s) platforms bring many benefits to containerized, cloud-native applications. Here are some of the key benefits:

Faster time to market

Because Kubernetes provides a layer of abstraction, developers can build applications on containers without actively managing those containers themselves. Kubernetes automatically configures the underlying container infrastructure and scales it up or down as the need arises.

This shortened development cycles and helped companies bring applications to market faster.

Better cost optimization

With automatic container management, applications make better use of the underlying container infrastructure, which reduces a company's cloud infrastructure costs.

Tools like Taikun take this a step further by letting you budget each team's spending on Kubernetes deployments. You can read more about it here.

Improved scalability

Automatic scaling helps applications keep performing well as load changes, on any cloud platform. When demand surges, Kubernetes can automatically raise the number of replicas of a workload, for example through the Horizontal Pod Autoscaler. The underlying ReplicaSet then creates the additional Pods, and therefore the containers, needed to serve that demand.
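The Horizontal Pod Autoscaler documentation describes its core calculation as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). The Python sketch below applies that formula under three assumptions:

- It uses the documented default tolerance of 0.1, so no scaling happens when the ratio is close to 1.0.
- It clamps the result to a minimum and maximum replica count.
- It leaves out details the real controller handles, such as unready Pods, missing metrics, and scale-down stabilization.

The function name and the default `min_replicas`/`max_replicas` values are illustrative.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Simplified HPA calculation for one metric (e.g. average CPU utilization)."""
    ratio = current_metric / target_metric
    # Close enough to the target: leave the replica count alone.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Respect the configured bounds.
    return max(min_replicas, min(max_replicas, desired))


print(desired_replicas(3, current_metric=90, target_metric=50))   # 6 (3 * 1.8 = 5.4, rounded up)
print(desired_replicas(4, current_metric=52, target_metric=50))   # 4 (within tolerance)
print(desired_replicas(8, current_metric=100, target_metric=50))  # 10 (16, capped at max_replicas)
```

Rounding up rather than down errs on the side of having enough capacity during a surge.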

Multi-cloud infrastructure

With Kubernetes, container management was standardized over multiple cloud providers. This helped companies run the same application on different platforms without changing much code.

The ability to host the same application on multiple clouds also made it more resilient and vendor-agnostic. This also enabled the rise of hybrid-cloud infrastructure.

Easy migration

Another result of Kubernetes-based infrastructure was that applications could easily be cloud-provider-agnostic. This removed the issue of vendor lock-in that plagued many early adopters of the cloud.

Greater resource efficiency

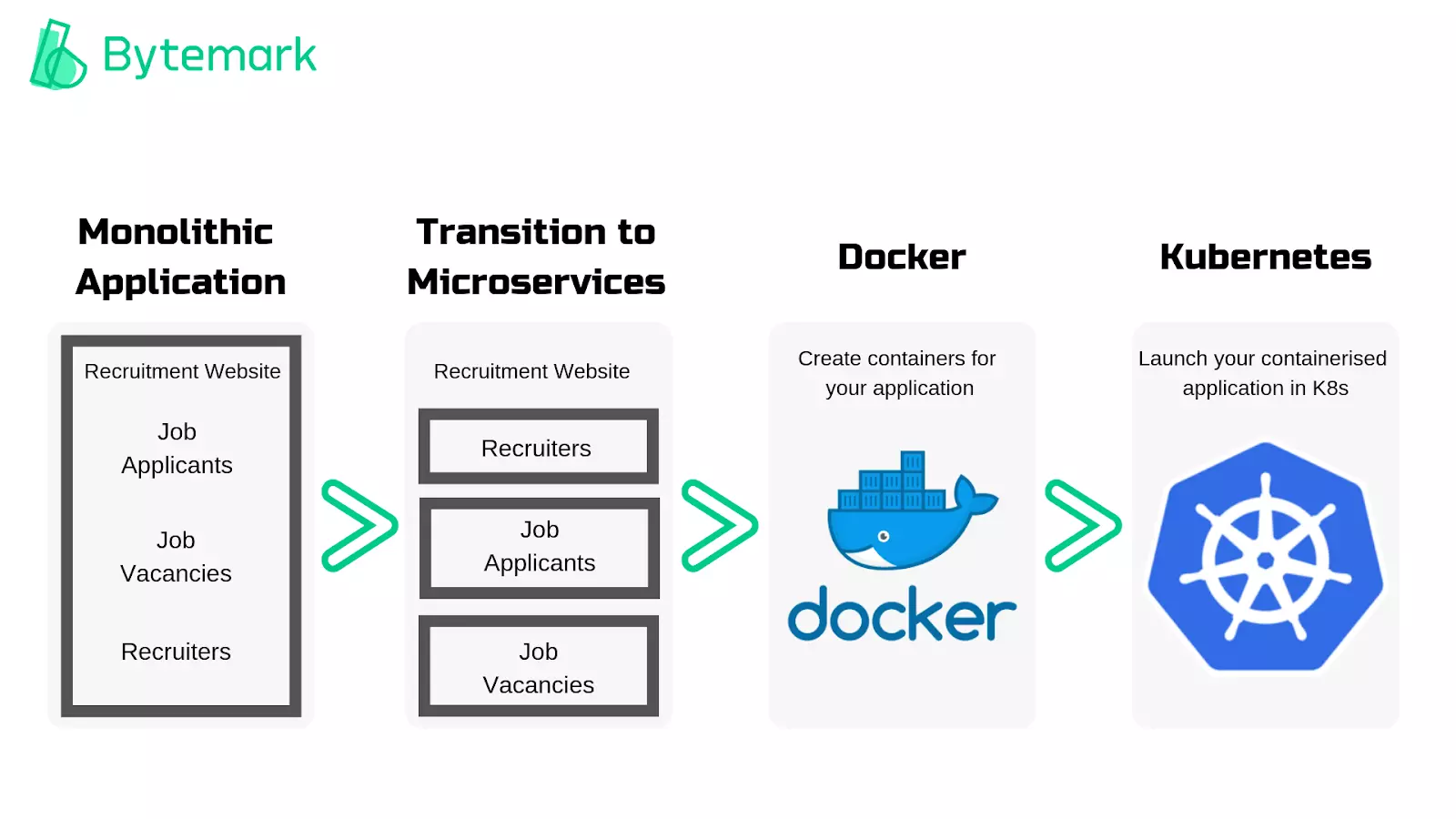

Containerization also helped many applications move faster to a microservices-based architecture, which improved the overall efficiency of the system. Kubernetes amplified these gains in two ways. It scales containers automatically, and it schedules them onto nodes according to their declared CPU and memory requests, so compute resources are allocated more efficiently.
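The kube-scheduler places each Pod in two phases. First it filters out nodes that cannot fit the Pod's resource requests, then it scores the remaining nodes. The sketch below models only CPU requests and uses a bin-packing score that prefers the node that ends up most utilized. This is similar in spirit to the scheduler's optional MostAllocated scoring strategy; the default strategy instead favors less-allocated nodes. `Node` and `schedule` are hypothetical names for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    name: str
    cpu_allocatable_m: int    # CPU available for Pods, in millicores
    cpu_requested_m: int = 0  # CPU already requested by Pods placed here

    def free_m(self) -> int:
        return self.cpu_allocatable_m - self.cpu_requested_m


def schedule(pod_cpu_request_m: int, nodes: List[Node]) -> Optional[str]:
    """Pick a node for a Pod with the given CPU request, or None if nothing fits."""
    # Filter: drop nodes without enough unrequested CPU.
    feasible = [n for n in nodes if n.free_m() >= pod_cpu_request_m]
    if not feasible:
        return None  # a real cluster would leave the Pod Pending
    # Score: bin packing, i.e. prefer the node that would be most utilized afterwards.
    best = max(feasible,
               key=lambda n: (n.cpu_requested_m + pod_cpu_request_m) / n.cpu_allocatable_m)
    best.cpu_requested_m += pod_cpu_request_m
    return best.name


cluster = [Node("node-a", 2000), Node("node-b", 4000)]
print([schedule(req, cluster) for req in (500, 1000, 1000, 5000)])
# ['node-a', 'node-a', 'node-b', None]
```

Packing Pods tightly leaves whole nodes idle, which a cluster autoscaler can then remove. Spreading Pods out leaves more headroom on each node. Which trade-off is better depends on the workload.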

Automated rollouts and rollbacks

Kubernetes also makes it easy to roll out changes to an application. Rolling updates replace the previous version gradually, so services keep running while the update takes place.

Traffic moves to a newer version progressively because a Deployment replaces old Pods with new ones a few at a time. If something goes wrong in the deployment, the change can be rolled back to an earlier revision (for example, with `kubectl rollout undo`) while the remaining Pods keep serving incoming requests.
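A Deployment's rolling update is bounded by two settings:

- `maxSurge`: how many Pods above the desired count may exist during the update.
- `maxUnavailable`: how many Pods below the desired count may be unavailable.

The Python sketch below steps through an update using absolute numbers for both settings. It assumes new Pods become ready immediately. The real Deployment controller also accepts percentages and waits for readiness before continuing. Because a Service routes to every ready Pod matching its selector, traffic shifts to the new version as the counts change. `rolling_update` is a hypothetical helper, not a Kubernetes API.

```python
from typing import List, Tuple


def rolling_update(replicas: int, max_surge: int, max_unavailable: int) -> List[Tuple[int, int]]:
    """Return (old_pods, new_pods) after each step, assuming new Pods are ready at once."""
    if max_surge == 0 and max_unavailable == 0:
        raise ValueError("max_surge and max_unavailable cannot both be 0")
    old, new = replicas, 0
    steps = [(old, new)]
    while old > 0 or new < replicas:
        # Scale up the new version without exceeding replicas + max_surge Pods in total.
        new += min(replicas - new, replicas + max_surge - (old + new))
        # Scale down the old version while keeping at least replicas - max_unavailable Pods.
        old -= min(old, (old + new) - (replicas - max_unavailable))
        steps.append((old, new))
    return steps


print(rolling_update(4, max_surge=1, max_unavailable=0))
# [(4, 0), (3, 1), (2, 2), (1, 3), (0, 4)]
```

With `max_unavailable=0` the service never drops below full capacity, at the cost of a slower rollout. Allowing some unavailability lets the update replace more Pods per step.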

Horizontal scaling

Besides autoscaling, Kubernetes lets you scale an application horizontally by hand. You can change its number of replicas with a single command (`kubectl scale`) or from a UI. Taikun is one of the tools that let you do this from a UI.

Self-healing

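Kubernetes also has self-healing capabilities. If a container fails, Kubernetes (K8s) restarts it according to the Pod's restart policy. A crash is not the only trigger: a failing liveness probe, for example when a container becomes unresponsive, also leads to a restart. Kubernetes also replaces controller-managed Pods when the node running them fails, and it stops sending traffic to Pods that fail their readiness checks.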
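Container restarts are governed by the Pod's `restartPolicy`, which can be `Always`, `OnFailure`, or `Never`. A failed liveness probe makes the kubelet kill the container, after which the same policy applies. The Kubernetes documentation describes the delay between restarts as an exponential backoff (10s, 20s, 40s, ...) capped at five minutes. The sketch below encodes those documented defaults. `should_restart` and `restart_delays` are hypothetical helpers. The sketch ignores details such as the backoff resetting after a container has run for ten minutes without problems.

```python
from typing import List


def should_restart(restart_policy: str, exit_code: int) -> bool:
    """Decide whether a container that has exited would be restarted."""
    if restart_policy == "Always":
        return True
    if restart_policy == "OnFailure":
        return exit_code != 0  # only non-zero exit codes count as failures
    if restart_policy == "Never":
        return False
    raise ValueError(f"unknown restartPolicy: {restart_policy}")


def restart_delays(failures: int, initial_s: int = 10, cap_s: int = 300) -> List[int]:
    """Delay in seconds before each successive restart: doubling, then capped."""
    delays = []
    delay = initial_s
    for _ in range(failures):
        delays.append(delay)
        delay = min(delay * 2, cap_s)
    return delays


print(should_restart("OnFailure", exit_code=1))  # True
print(should_restart("OnFailure", exit_code=0))  # False
print(restart_delays(7))  # [10, 20, 40, 80, 160, 300, 300]
```

The growing delay is why a container that keeps crashing shows up as `CrashLoopBackOff` instead of restarting in a tight loop.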

Faster development cycle

As Kubernetes encouraged the shift to microservices-based architectures, it sped up development compared with building monolithic systems. With microservices, different teams can work on different services in parallel and independently, which helps the overall system improve faster.

Future of Cloud Computing with Kubernetes

Kubernetes has clearly revolutionized how cloud computing works, both in the past and today. But what about the future? What will Kubernetes look like in the years ahead? Let’s look at some of the promising technologies shaping the future of computing with Kubernetes.

Micro VM Kubernetes

Cloud providers are starting to run Kubernetes workloads inside micro virtual machines (micro VMs). Placing each workload in its own lightweight VM strengthens security and workload isolation while remaining far more resource-efficient than traditional VMs.

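One popular micro VM technology is Firecracker, an open-source virtual machine monitor developed by AWS. Container runtimes such as Kata Containers can use it to run Kubernetes Pods inside micro VMs.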

Security improvements on Kubernetes

As Kubernetes has become more ubiquitous, security threats against it have increased, and many security layers have been added to Kubernetes deployments over time.

Tools like Gatekeeper help define, audit, and enforce policies on Kubernetes resources. Gatekeeper builds on the Open Policy Agent (OPA) Constraint Framework. It acts as an admission controller that validates requests to create and update Pods on Kubernetes clusters.
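Gatekeeper policies are typically written in Rego as ConstraintTemplates. Either way, the decision returned to the API server is a simple allow or deny inside an `AdmissionReview` response. The Python sketch below shows that decision for a hypothetical policy that rejects Pods whose container images are untagged or use the `latest` tag. `review_pod` is an illustrative name, and the sketch does not use Gatekeeper's API. Its tag parsing is deliberately simple; for instance, an image pinned by digest counts as tagged.

```python
from typing import Any, Dict, List


def review_pod(admission_review: Dict[str, Any]) -> Dict[str, Any]:
    """Validate a Pod carried in an admission.k8s.io/v1 AdmissionReview request."""
    request = admission_review["request"]
    containers: List[Dict[str, Any]] = request["object"]["spec"].get("containers", [])

    def unpinned(image: str) -> bool:
        last_segment = image.rsplit("/", 1)[-1]  # ignore a registry host:port prefix
        return ":" not in last_segment or image.endswith(":latest")

    offending = [c["name"] for c in containers if unpinned(c["image"])]
    response: Dict[str, Any] = {"uid": request["uid"], "allowed": not offending}
    if offending:
        response["status"] = {
            "code": 403,
            "message": "images must use an explicit tag other than 'latest': "
                       + ", ".join(offending),
        }
    return {"apiVersion": "admission.k8s.io/v1", "kind": "AdmissionReview",
            "response": response}


pod_review = {"request": {"uid": "abc-123", "object": {"spec": {"containers": [
    {"name": "web", "image": "nginx:1.25"},
    {"name": "sidecar", "image": "busybox"},
]}}}}
print(review_pod(pod_review)["response"]["allowed"])  # False
```

Echoing the request's `uid` in the response lets the API server match each decision to the request it answers.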

Being open source, Kubernetes has a great community of contributors who keep the project up to date to tackle the latest security threats.

Kubernetes for edge and IoT

Use cases for edge computing and IoT applications are increasing at a rapid pace. For such cases, developers have created lightweight versions of Kubernetes. Popular examples include K3s, MicroK8s, and KubeEdge.

These lightweight versions are much smaller than standard Kubernetes; K3s, for example, is packaged as a single binary.

Taikun – Making Kubernetes easy

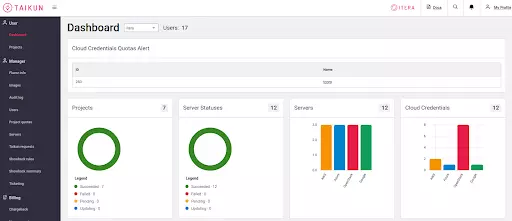

Taikun is a tool that lets you set up Kubernetes infrastructure across multiple clouds. Its intuitive dashboard lets you monitor and manage the entire infrastructure without needing a team of cloud engineers.

Taikun works across private, public, and hybrid cloud infrastructure.

It not only automates your Kubernetes deployments but also provides excellent monitoring capabilities from a central dashboard. It can manage your deployments across major cloud providers and platforms such as Google Cloud, Microsoft Azure, Amazon Web Services (AWS), and Red Hat OpenShift.

Taikun makes Kubernetes easy. Make sure you give it a try.