Throughout our series on Kubernetes, we have covered a wide range of topics. First, we provided a detailed guide to help you get started with Kubernetes, explained Kubernetes architecture, and discussed why Kubernetes is a critical tool in any cloud infrastructure setup.

We then explored other essential Kubernetes concepts, such as namespaces, workloads, and deployments, to give you a comprehensive understanding of each. We also discussed kubectl, the command-line tool most frequently used to interact with a Kubernetes cluster, in depth.

In this blog, we’ll provide you with tips for managing high-traffic loads in Kubernetes. But first, let’s understand why managing high traffic loads is necessary.

Why is Managing High-Traffic Loads Necessary?

In today’s digital world, websites and applications are expected to handle high traffic volumes, especially during peak hours or promotional campaigns. When server resources become overwhelmed, the result can be slower response times, degraded performance, and even complete service disruptions.

This leads to a poor user experience and can cause lost revenue, missed business opportunities, and a damaged brand reputation. According to one report, 91% of companies say downtime costs them more than $300,000 per hour. For instance, the 2021 Facebook outage cost Meta almost $100 million in revenue.

Therefore, effective management of high-traffic loads is critical to ensuring that websites and applications can handle the volume of traffic they receive without compromising on performance, availability, or security. This requires careful planning and execution of strategies such as scaling, load balancing, and caching, among others.

By managing high-traffic loads successfully, businesses can keep their digital platforms running without disruption, provide a seamless user experience, and ultimately support their own success.

How to Manage High-Traffic Loads in Kubernetes?

Managing high traffic loads in Kubernetes can be challenging, as it requires a deep understanding of Kubernetes architecture and deployment strategies.

Below are some tips for effectively managing high-traffic loads in Kubernetes.

- Scaling Kubernetes Deployments

Scaling Kubernetes deployments is crucial to managing high-traffic loads in Kubernetes. When traffic increases, additional resources such as CPU, memory, and storage may be required to handle the additional load. Kubernetes provides several options for scaling deployments to ensure that resources are allocated effectively and efficiently.

- Horizontal Pod Autoscaler: Kubernetes provides an autoscaling feature, the Horizontal Pod Autoscaler (HPA), that automatically adjusts the number of replicas in a Deployment (or another scalable workload, such as a StatefulSet) based on observed metrics such as CPU utilization or custom metrics. The HPA periodically compares the current metric value with a target you define and adds or removes replicas to keep utilization close to that target. This helps the deployment handle high-traffic loads without downtime or performance issues, while avoiding idle replicas when traffic drops.

- Vertical Pod Autoscaler: The Vertical Pod Autoscaler (VPA) can help manage high traffic by adjusting the CPU and memory requests (and, proportionally, the limits) of containers based on their observed usage. VPA works best alongside other scaling strategies, such as the Horizontal Pod Autoscaler (HPA) and the Cluster Autoscaler. Note, however, that the VPA project advises against combining VPA with an HPA that scales on the same CPU or memory metrics.

- Cluster Autoscaler: The Cluster Autoscaler automatically resizes a Kubernetes cluster based on resource demand. It adds nodes when pods cannot be scheduled because of insufficient resources, and it removes nodes that have been underutilized for an extended period, once their pods can be placed on other nodes.

Using these scaling methods together, you can scale both your workloads and your cluster up or down automatically as traffic rises and falls.
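To make the HPA behavior more concrete: the Kubernetes documentation describes the core HPA calculation as desiredReplicas = ceil[currentReplicas × (currentMetricValue / desiredMetricValue)], with no scaling when the ratio is within a tolerance of 1.0 (0.1 by default). The sketch below models only that calculation plus clamping to a minimum and maximum replica count. The function name and its default bounds are illustrative assumptions; the real controller also accounts for missing metrics, pods that are not yet ready, and scale-down stabilization windows.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Simplified model of the HPA replica calculation (illustrative only)."""
    ratio = current_metric / target_metric
    # Within the tolerance band, the HPA leaves the replica count unchanged.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured minReplicas/maxReplicas bounds.
    return max(min_replicas, min(max_replicas, desired))


# 4 replicas averaging 90% CPU against a 60% target -> scale out to 6.
print(desired_replicas(4, 90, 60))  # 6
# Traffic drops to 20% CPU -> scale in to 2.
print(desired_replicas(4, 20, 60))  # 2
```

Because the ratio is proportional, a sudden traffic spike translates directly into a proportional jump in replicas, while the `max_replicas` bound keeps a runaway metric from exhausting the cluster.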

- Load Balancing

Load balancing in Kubernetes distributes incoming network traffic across multiple pods, servers, or other network resources to improve application performance, reliability, and availability. This prevents any single pod or server from becoming overloaded with traffic.

Different load-balancing strategies can be used for managing external traffic to pods.

- Round Robin

- Kube-proxy L4 Round Robin Load Balancing

- L7 Round Robin Load Balancing

- Consistent Hashing/Ring Hash

- Fastest Response

- Fewest Servers

- Least Connections

- Resource-Based/Least Load

Depending on how you need to distribute traffic, you can choose one of these algorithms or a combination of them. They are implemented by components such as kube-proxy, Ingress controllers, and service meshes rather than by a single Kubernetes setting.
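To illustrate how three of these algorithms differ, here is a minimal, single-threaded sketch of round robin, least connections, and consistent hashing (a hash ring with virtual nodes). The class names, the pod names, and the choice of MD5 as the ring hash are assumptions for illustration; production proxies implement these strategies with their own data structures and concurrency handling.

```python
import bisect
import hashlib
import itertools


class RoundRobin:
    """Hand out backends in a fixed rotating order."""

    def __init__(self, backends):
        self._cycle = itertools.cycle(backends)

    def pick(self):
        return next(self._cycle)


class LeastConnections:
    """Send each request to the backend with the fewest in-flight connections."""

    def __init__(self, backends):
        self.active = {b: 0 for b in backends}

    def pick(self):
        # Ties go to the first backend in insertion order.
        backend = min(self.active, key=self.active.get)
        self.active[backend] += 1
        return backend

    def release(self, backend):
        self.active[backend] -= 1


class HashRing:
    """Consistent hashing: a given key keeps mapping to the same backend."""

    def __init__(self, backends, vnodes=100):
        # Each backend is placed on the ring many times to spread load evenly.
        self._ring = sorted(
            (self._hash(f"{b}#{i}"), b) for b in backends for i in range(vnodes)
        )
        self._hashes = [h for h, _ in self._ring]

    @staticmethod
    def _hash(value):
        return int(hashlib.md5(value.encode()).hexdigest(), 16)

    def pick(self, key):
        # First ring position clockwise from the key's hash, wrapping around.
        idx = bisect.bisect(self._hashes, self._hash(key)) % len(self._ring)
        return self._ring[idx][1]


pods = ["pod-a", "pod-b", "pod-c"]
rr = RoundRobin(pods)
print([rr.pick() for _ in range(4)])  # ['pod-a', 'pod-b', 'pod-c', 'pod-a']
```

Round robin ignores load entirely, least connections reacts to uneven request durations, and consistent hashing trades perfect balance for stickiness: when a pod is removed, only the keys that were mapped to that pod move elsewhere.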

- Resource Allocation

Resource allocation can help manage high traffic in Kubernetes by ensuring that pods have sufficient resources (CPU, memory) to handle the increased traffic. Kubernetes allows you to set resource requests and limits for containers running in pods.

Resource requests specify the amount of CPU and memory the scheduler reserves for a container when it places the pod on a node, while resource limits cap the amount a container can use: CPU usage above the limit is throttled, and a container that exceeds its memory limit may be terminated. By setting appropriate requests and limits, you help Kubernetes place pods on nodes that can actually serve them and ensure enough resources are available during high-traffic periods.
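Because scheduling is driven by requests, a pod fits on a node only if the node's allocatable CPU and memory, minus the requests of the pods already running there, can cover the pod's summed requests. The sketch below models that simplified fit check. It is an assumption-laden illustration rather than the scheduler's code: it understands only the `m` CPU suffix and the `Ki`/`Mi`/`Gi` memory suffixes, and it ignores init containers, pod overhead, and extended resources. The function names are hypothetical.

```python
_MEM_UNITS = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30}


def parse_cpu(quantity):
    """Convert a CPU quantity such as '250m' or '2' to cores."""
    if quantity.endswith("m"):
        return int(quantity[:-1]) / 1000
    return float(quantity)


def parse_memory(quantity):
    """Convert a memory quantity such as '512Mi' to bytes (binary suffixes only)."""
    for suffix, factor in _MEM_UNITS.items():
        if quantity.endswith(suffix):
            return int(quantity[:-2]) * factor
    return int(quantity)  # plain bytes


def pod_requests(containers):
    """Sum the CPU (cores) and memory (bytes) requests of a pod's containers."""
    cpu = sum(parse_cpu(c["cpu"]) for c in containers)
    mem = sum(parse_memory(c["memory"]) for c in containers)
    return cpu, mem


def fits(containers, allocatable, already_requested):
    """Simplified version of the scheduler's request-based fit check."""
    cpu, mem = pod_requests(containers)
    free_cpu = parse_cpu(allocatable["cpu"]) - parse_cpu(already_requested["cpu"])
    free_mem = parse_memory(allocatable["memory"]) - parse_memory(already_requested["memory"])
    return cpu <= free_cpu and mem <= free_mem


web_pod = [{"cpu": "250m", "memory": "256Mi"}, {"cpu": "500m", "memory": "512Mi"}]
node = {"cpu": "2", "memory": "4Gi"}
print(fits(web_pod, node, {"cpu": "1", "memory": "3Gi"}))     # True
print(fits(web_pod, node, {"cpu": "1500m", "memory": "3Gi"}))  # False
```

Requests that are too high waste capacity that other replicas could use during a spike, while requests that are too low let the scheduler pack pods onto nodes that cannot actually serve them under load.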

- Ingress Controller

Kubernetes uses the concept of Ingress to define how HTTP and HTTPS traffic from external sources should reach applications running inside the cluster. An Ingress resource provides a simple, declarative configuration of routing rules for this purpose.

An Ingress resource has no effect on its own; it requires an Ingress Controller, such as the NGINX Ingress Controller, installed in the cluster. A single central controller can manage incoming traffic for multiple applications: it acts as the gateway for external traffic and routes each request to the appropriate Service based on the rules defined in the Ingress resources.

The Ingress Controller can also provide features like SSL/TLS termination, load balancing, and name-based virtual hosting. Depending on the controller, it can apply different load-balancing strategies and algorithms to distribute incoming traffic among multiple backend services.
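The routing rules in an Ingress resource are matched by host and path. Per the Ingress documentation, `Prefix` paths match element by element on `/`-separated segments (so `/api` matches `/api/v1` but not `/apiary`), the longest matching path takes precedence, and an `Exact` path wins over a `Prefix` path of equal length. The sketch below is a simplified model of that matching logic, not any controller's implementation; it assumes exact host matches only (no wildcard hosts or default backend), and the hostnames and Service names are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Rule:
    host: str
    path: str
    path_type: str  # "Exact" or "Prefix"
    service: str


def _prefix_match(prefix: str, path: str) -> bool:
    """Element-wise prefix match on '/'-separated path segments."""
    prefix_parts = [p for p in prefix.split("/") if p]
    path_parts = [p for p in path.split("/") if p]
    return path_parts[:len(prefix_parts)] == prefix_parts


def route(rules: List[Rule], host: str, path: str) -> Optional[str]:
    """Return the Service for a request, or None if no rule matches."""
    best, best_key = None, None
    for rule in rules:
        if rule.host != host:
            continue
        if rule.path_type == "Exact":
            matched = rule.path == path
        else:
            matched = _prefix_match(rule.path, path)
        if not matched:
            continue
        # Longest path wins; on a tie, Exact beats Prefix.
        key = (len(rule.path), rule.path_type == "Exact")
        if best_key is None or key > best_key:
            best, best_key = rule, key
    return best.service if best else None


rules = [
    Rule("shop.example.com", "/", "Prefix", "frontend-svc"),
    Rule("shop.example.com", "/api", "Prefix", "api-svc"),
]
print(route(rules, "shop.example.com", "/api/v1/orders"))  # api-svc
print(route(rules, "shop.example.com", "/apiary"))         # frontend-svc
```

Splitting heavy API traffic onto its own Service this way lets you scale the API and frontend deployments independently behind a single entry point.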

- Traffic Control

Traffic control, sometimes referred to as traffic routing or traffic shaping, is the practice of managing network traffic by controlling its flow and destination. In a production environment, it is essential to implement traffic control in Kubernetes to protect the infrastructure and applications from potential attacks and sudden traffic surges.

Rate limiting and circuit breaking are two techniques that can be incorporated into the application development cycle to enhance traffic control. They regulate the amount of traffic sent to an application and prevent it from being overwhelmed by excessive traffic or failing because of network issues.

- Rate limiting: A high volume of HTTP requests, whether malicious or benign, can overload services and cause apps to crash. Rate limiting restricts the number of requests (such as GET or POST requests) a user can make in a given time period. During a DDoS attack, it can cap the incoming request rate at a level typical for real users.

- Circuit breaking: If a service is unavailable or experiences high latency, requests waiting on long timeouts can trigger a cascading failure that spreads to other services and can bring down the entire application. A circuit breaker prevents this by monitoring calls to a service and, once failures exceed a preset threshold, immediately returning error responses instead of forwarding more requests until the service has had time to recover.
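Both techniques are usually configured in an Ingress controller, API gateway, or service mesh rather than written by hand, but a small model shows how they behave. The sketch below pairs a token-bucket rate limiter (one common rate-limiting algorithm, chosen here as an example) with a simple circuit breaker that has closed, open, and half-open states. The class names, parameters, and the injectable `clock` are assumptions for illustration; the code is single-threaded and not production-ready.

```python
import time


class TokenBucket:
    """Allow bursts up to `capacity`, refilling at `rate` tokens per second."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.tokens = capacity
        self.clock = clock
        self.last = clock()

    def allow(self):
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False  # caller would typically respond with HTTP 429


class CircuitOpenError(RuntimeError):
    pass


class CircuitBreaker:
    """Fail fast after `failure_threshold` consecutive failures."""

    def __init__(self, failure_threshold=3, reset_timeout=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self.clock = clock
        self.failures = 0
        self.opened_at = None  # None means the circuit is closed

    def call(self, fn, *args, **kwargs):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_timeout:
                raise CircuitOpenError("circuit open; failing fast")
            # Timeout elapsed: half-open, so let this request through as a trial.
        try:
            result = fn(*args, **kwargs)
        except Exception:
            self.failures += 1
            # A failed trial, or too many failures, (re)opens the circuit.
            if self.opened_at is not None or self.failures >= self.failure_threshold:
                self.opened_at = self.clock()
            raise
        # Success closes the circuit and resets the failure count.
        self.failures = 0
        self.opened_at = None
        return result
```

For example, `TokenBucket(rate=2.0, capacity=2)` admits a burst of two requests and then roughly two per second, while a `CircuitBreaker(failure_threshold=2, reset_timeout=10.0)` wrapping calls to a failing dependency stops calling it after two errors and retries only after ten seconds.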

- Traffic Splitting

Traffic splitting is a form of traffic control that determines what proportion of incoming traffic goes to each version of a backend app running in an environment. It is vital in app development because it allows teams to test new features and versions without negatively affecting customers. Useful deployment scenarios include blue-green deployments, debug routing, and more. (Note that the definitions of these terms may vary across different industries.)

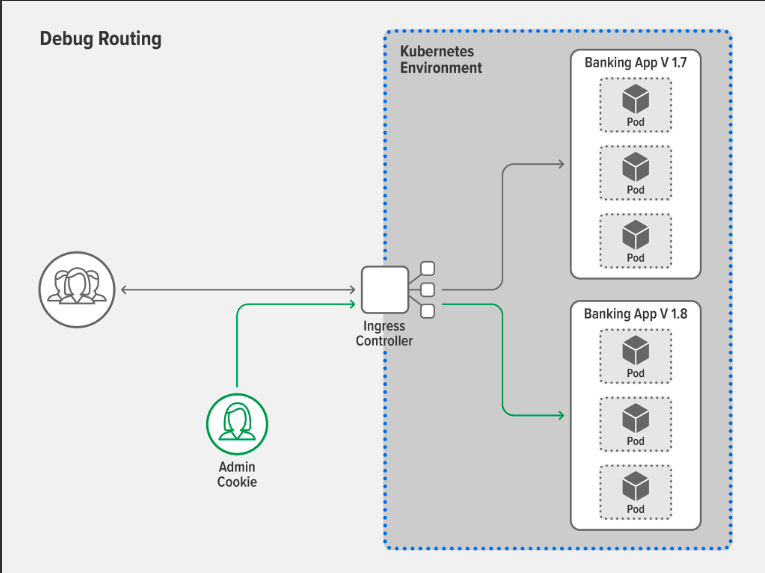

- Debug routing: Suppose you want to test a new feature, such as a credit score, in your banking app before releasing it to customers. With debug routing, you deploy the new version publicly but allow only specific users to access it, based on attributes like a session cookie, session ID, or group ID. For instance, you can route requests from users with an admin session cookie to the new version with the credit score feature, while everyone else continues to use the stable version.

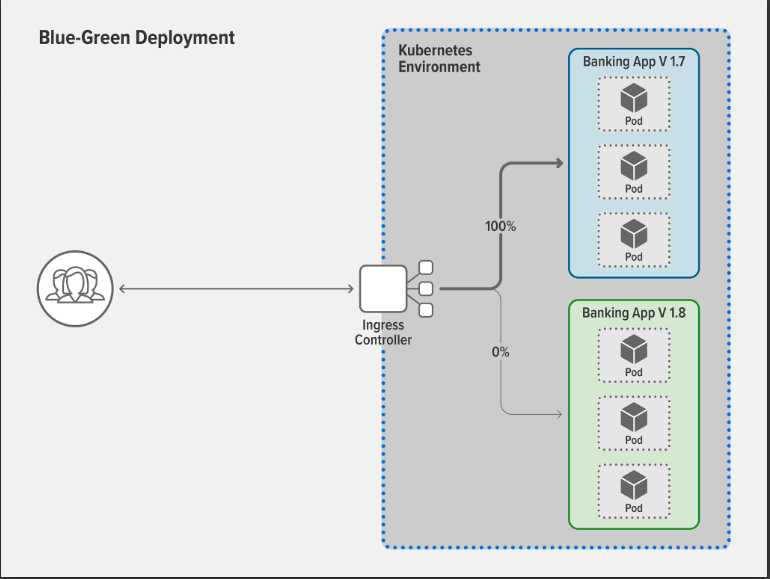

- Blue-green deployments: Suppose your banking app needs a major version change. A traditional upgrade would require downtime for users, because the old version has to be taken down before the new version goes into production. With a blue-green deployment, you keep the old version (blue) in production while deploying the new version (green) alongside it in the same environment, then switch traffic over once the new version is ready. This greatly reduces or eliminates downtime and makes rolling back as simple as switching traffic back.
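The routing decisions behind these scenarios can be sketched in a few lines: check for the debug attribute first, then split the remaining traffic by weight. In the sketch below, the cookie name `session_role`, the value `admin`, and the version labels are hypothetical; in practice this logic lives in an Ingress controller, gateway, or service mesh configuration rather than in application code. A blue-green cutover is the special case where the weights flip from 100/0 to 0/100.

```python
import random

# Hypothetical debug attribute, for illustration only.
DEBUG_COOKIE = "session_role"
DEBUG_VALUE = "admin"


def choose_version(cookies, weights, debug_version="v2", rng=random):
    """Pick a backend version for a single request.

    Debug routing: requests carrying the admin cookie always reach debug_version.
    Everyone else is split according to `weights`, e.g. {"v1": 90, "v2": 10}.
    """
    if cookies.get(DEBUG_COOKIE) == DEBUG_VALUE:
        return debug_version
    versions = list(weights)
    return rng.choices(versions, weights=[weights[v] for v in versions], k=1)[0]


# Debug routing: only admins see the credit score feature in v2.
print(choose_version({"session_role": "admin"}, {"v1": 100, "v2": 0}))  # v2
print(choose_version({}, {"v1": 100, "v2": 0}))                         # v1

# Blue-green cutover: flip all traffic from blue to green in one step.
print(choose_version({}, {"blue": 0, "green": 100}))  # green
```

Random per-request selection keeps the sketch short; if users should consistently see the same version across requests, hashing a stable user identifier instead of drawing a random number is a common alternative.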

Managing Kubernetes in Production with Taikun

Managing Kubernetes in production requires careful planning, monitoring, and the implementation of best practices. From load balancing to traffic control and deployment strategies, many factors must be considered to ensure your Kubernetes infrastructure runs smoothly and reliably.

To simplify this process, you can use Taikun, a cloud-based application that helps you manage and monitor your Kubernetes infrastructure. Taikun works with all major cloud infrastructures and provides a simple, seamless interface for your Kubernetes setup.

By implementing the right strategies and tools, you can optimize your Kubernetes infrastructure for high traffic and prepare it for the challenges that arise. Take advantage of the available resources to improve your Kubernetes management and take your applications to the next level.