A GitOps Approach to Automating Kubernetes Infrastructure using GitLab CI, Terraform, and Taikun CloudWorks

Introduction

In today's fast-paced DevOps world, managing Kubernetes infrastructure efficiently is both essential and challenging. This blog post shows how to build a fully automated pipeline with GitLab CI, Terraform, and Taikun CloudWorks that manages Kubernetes clusters and virtual clusters (vClusters) and deploys applications such as NGINX, starting from a single Git commit.

Why This Matters

- Infrastructure as Code (IaC): Version-controlled infrastructure changes

- Automation: Reduced human error and faster deployments

- Consistency: Reproducible environments across deployments

- Cost Efficiency: Better resource utilization through virtual clusters

Prerequisites

Before we begin, ensure you have:

- GitLab Account
- Taikun CloudWorks Account
- Basic understanding of:
  - Terraform
  - Kubernetes
  - GitLab CI
  - Infrastructure as Code concepts

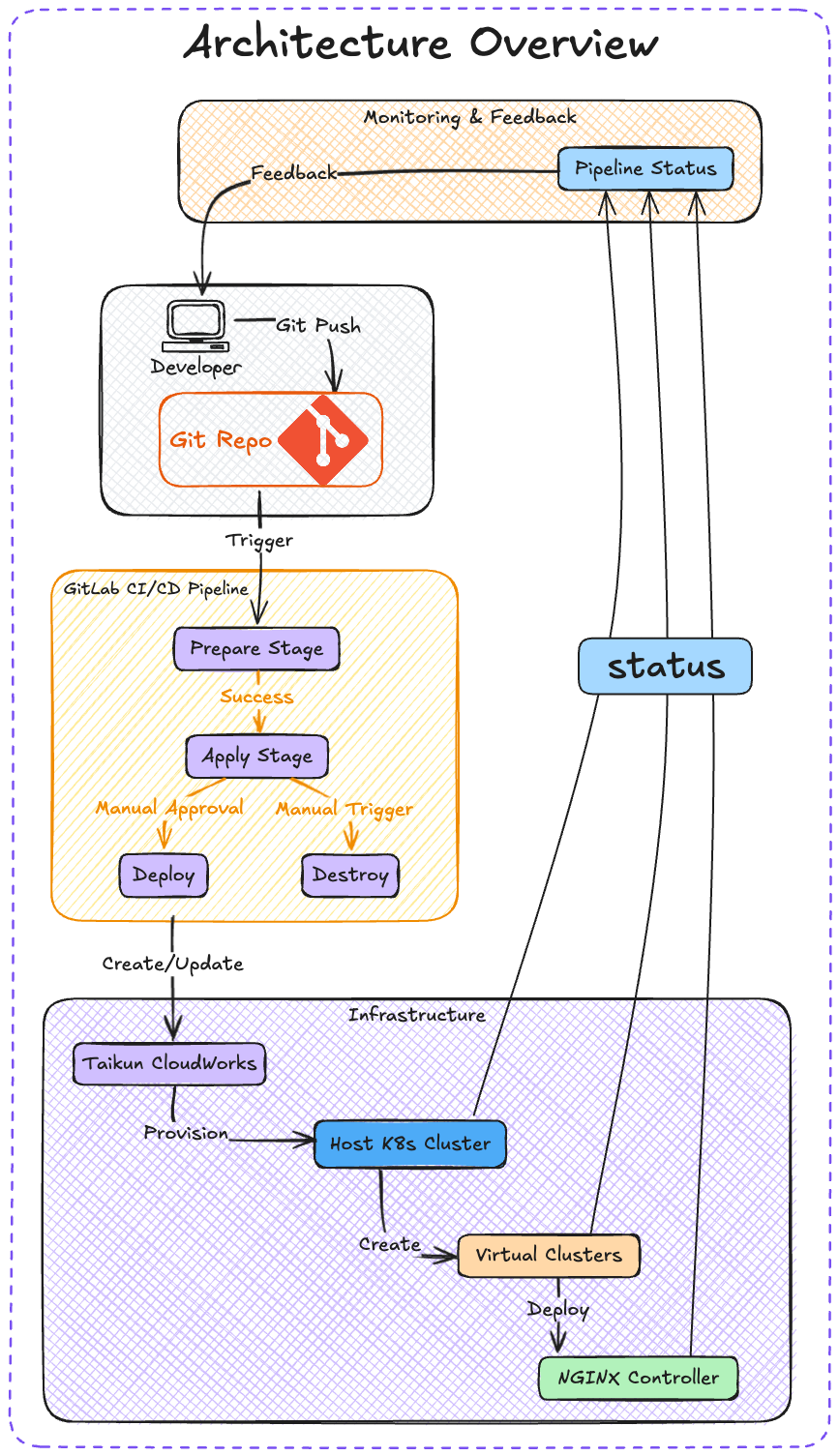

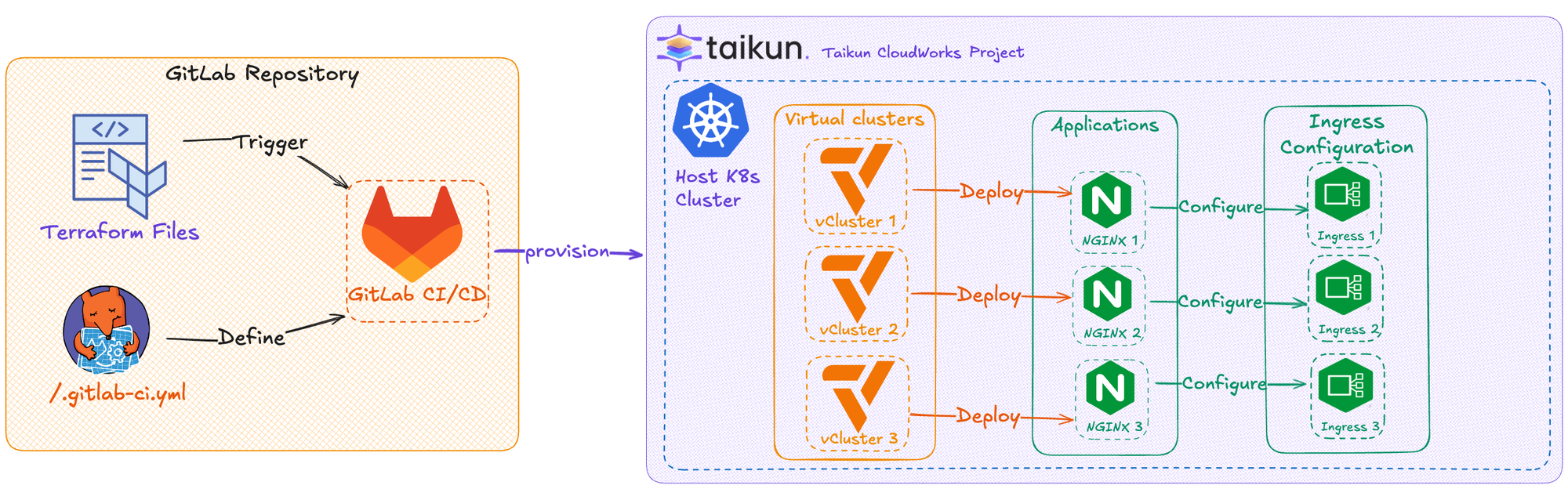

Architecture Overview

Our architecture consists of several key components working together:

1. Infrastructure Components
   - Host Kubernetes Cluster
   - Virtual Clusters (vCluster)
   - NGINX Ingress Controller
   - Application Workloads
2. Automation Components
   - GitLab Repository (Version Control)
   - GitLab CI Pipeline
   - Terraform Configurations
   - Taikun's Terraform Provider
3. Project Structure

```text
terraform-example/
├── .gitlab-ci.yml   # Pipeline definition
├── main.tf          # Main Terraform configuration
├── cloud.tf         # Cloud provider settings
├── project.tf       # Project and cluster configuration
├── variable.tf      # Variable definitions
├── profile.tf       # Access profiles
└── backup.tf        # Backup configurations
```

GitOps Workflow

Step-by-Step Implementation

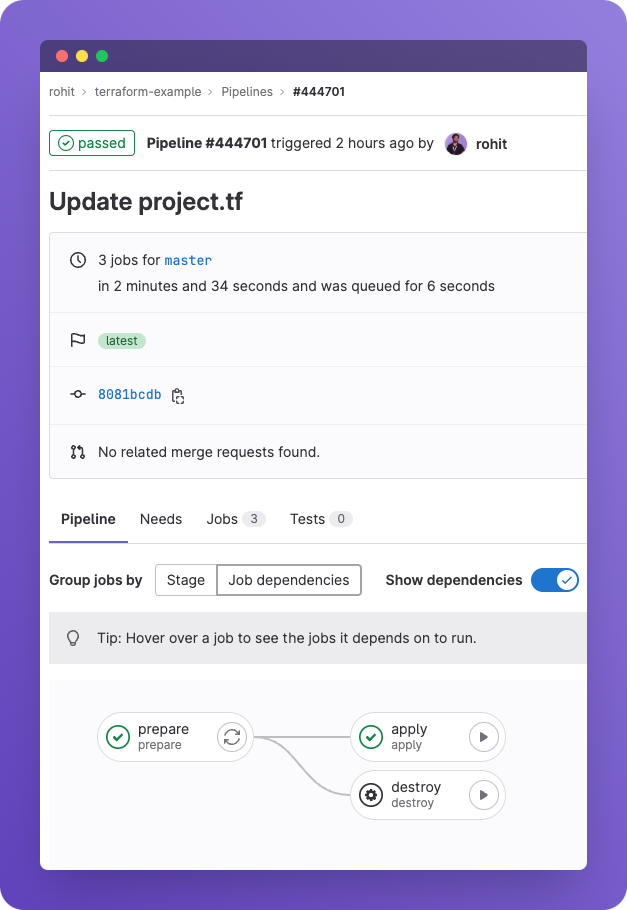

1. Setting Up GitLab CI Pipeline

A .gitlab-ci.yml file is a configuration file placed at the root of a project that defines the stages, jobs, and scripts to be executed during a CI/CD pipeline. In this YAML file, you can specify variables, define dependencies between jobs, and determine when and how each job should be run, enabling you to automate your software development workflow from building and testing to deployment.

Let's define our pipeline stages:

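```yaml
image:
  name: hashicorp/terraform:latest
  # The image's entrypoint is `terraform`; clear it so GitLab can run the script lines
  entrypoint: [""]

variables:
  # GitLab-managed Terraform state, read by the http backend declared in main.tf
  TF_HTTP_ADDRESS: ${CI_API_V4_URL}/projects/${CI_PROJECT_ID}/terraform/state/${CI_COMMIT_REF_NAME}
  TF_HTTP_LOCK_ADDRESS: ${CI_API_V4_URL}/projects/${CI_PROJECT_ID}/terraform/state/${CI_COMMIT_REF_NAME}/lock
  TF_HTTP_UNLOCK_ADDRESS: ${CI_API_V4_URL}/projects/${CI_PROJECT_ID}/terraform/state/${CI_COMMIT_REF_NAME}/lock
  TF_HTTP_LOCK_METHOD: POST
  TF_HTTP_UNLOCK_METHOD: DELETE
  # Authenticate to the state API with the job token
  TF_HTTP_USERNAME: gitlab-ci-token
  TF_HTTP_PASSWORD: ${CI_JOB_TOKEN}

stages:
  - prepare
  - apply
  - destroy

# Every job starts in a fresh container, so Terraform is initialized in each one
before_script:
  - terraform init

prepare:
  stage: prepare
  script:
    - terraform validate
    - terraform plan -out=plan.cache
  artifacts:
    # Hand the saved plan to the apply job (it may contain secrets, so keep it short-lived)
    paths:
      - plan.cache
    expire_in: 1 day

apply:
  stage: apply
  script:
    # Apply exactly the plan produced in the prepare stage
    - terraform apply plan.cache
  dependencies:
    - prepare
  when: manual

destroy:
  stage: destroy
  script:
    - terraform destroy -auto-approve
  when: manual
```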

2. Terraform Configuration

Here, we define the main configuration for our CloudWorks setup as code, using Taikun's Terraform Provider.

Main Configuration (main.tf)

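```hcl
terraform {
  # Store state in GitLab; connection details come from the TF_HTTP_* variables in .gitlab-ci.yml
  backend "http" {}

  required_providers {
    taikun = {
      source  = "itera-io/taikun"
      version = "1.9.1"
    }
  }
}

provider "taikun" {
  email    = var.taikun_email
  password = var.taikun_password
}
```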
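The provider block reads var.taikun_email and var.taikun_password, so both have to be declared in variable.tf. A minimal sketch of those declarations follows; the descriptions are illustrative. Marking the password as sensitive keeps it out of plan and apply output. In GitLab, you could supply the values as masked CI/CD variables named TF_VAR_taikun_email and TF_VAR_taikun_password, which Terraform reads from the job environment.

```hcl
# variable.tf (sketch): credentials used by the taikun provider
variable "taikun_email" {
  description = "Email of the Taikun CloudWorks account used by the provider"
  type        = string
}

variable "taikun_password" {
  description = "Password of the Taikun CloudWorks account"
  type        = string
  sensitive   = true # redacted in plan/apply output
}
```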

Project Configuration (project.tf)

```hcl
resource "taikun_cloud_credentials" "cloud" {
  name = "demo-credentials"
  # Cloud specific configurations
}

resource "taikun_kubernetes_profile" "kube" {
  name = "demo-profile"
  # Kubernetes configurations
}

resource "taikun_project" "project" {
  name                  = "demo-project"
  cloud_credential_id   = taikun_cloud_credentials.cloud.id
  kubernetes_profile_id = taikun_kubernetes_profile.kube.id
}
```
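Optionally, you can expose the IDs that Terraform creates as outputs. They then appear at the end of the apply job log and can be read later with terraform output, which helps when troubleshooting. Here is a small sketch for the project, assuming a new file such as outputs.tf (a hypothetical addition to the project structure):

```hcl
# outputs.tf (hypothetical file): surface the created project ID after apply
output "project_id" {
  description = "ID of the Taikun project defined in project.tf"
  value       = taikun_project.project.id
}
```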

3. Virtual Cluster Setup

Virtual clusters provide isolation and better resource management. You can use this resource to create as many vClusters as you need:

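```hcl
resource "taikun_virtual_cluster" "vcluster" {
  # count defines how many vClusters to create and makes count.index available below
  count = 2

  name                 = "demo-vcluster-${count.index}"
  project_id           = taikun_project.project.id
  delete_on_expiration = false
  hostname_generated   = "vcluster-${count.index}.cluster.local"
}
```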
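The example above tells vClusters apart only by count.index, which makes it awkward to remove or rename one specific vCluster. If you prefer stable, named vClusters (for example, one per environment), a for_each version like the sketch below could replace the indexed resource. The environment names are hypothetical. The arguments are the same ones used above.

```hcl
locals {
  # Hypothetical environment names: one vCluster is created per entry
  vcluster_envs = toset(["dev", "staging"])
}

resource "taikun_virtual_cluster" "env" {
  for_each = local.vcluster_envs

  name                 = "vcluster-${each.key}"
  project_id           = taikun_project.project.id
  delete_on_expiration = false
  hostname_generated   = "vcluster-${each.key}.cluster.local"
}
```

Each vCluster is then addressed by name, for example taikun_virtual_cluster.env["dev"], so adding or removing an environment leaves the others untouched.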

Virtual Cluster Benefits

- Resource Efficiency
  - Multiple virtual clusters share host cluster resources
  - Optimized resource allocation
  - Reduced infrastructure costs
- Isolation with Efficiency
  - Separate namespaces for different environments
  - Independent cluster management
  - Shared underlying infrastructure
- Operational Benefits
  - Simplified cluster management
  - Reduced operational overhead
  - Quick provisioning and de-provisioning

4. NGINX Deployment

I'm using NGINX to demonstrate this workflow, but you can use any application from the CloudWorks public application repository, or add your own application to our catalogue as a Helm chart and deploy it the same way.

```hcl
resource "taikun_application" "nginx" {
  name       = "nginx"
  project_id = taikun_project.project.id
  repository = "taikun-managed-apps"
  # NGINX specific configurations
}
```

Pipeline Execution

When you commit changes to your repository, the GitLab CI pipeline runs the following stages:

- Prepare Stage (automatic)
  - Initializes Terraform
  - Validates configurations
  - Creates execution plan
- Apply Stage (Manual Trigger)
  - Applies Terraform configurations
  - Creates/updates infrastructure
  - Deploys applications
- Destroy Stage (Manual Trigger)
  - Cleans up resources
  - Removes clusters and applications

GitOps Workflow Implementation

This setup implements GitOps principles in several key ways:

Git as Single Source of Truth

- All infrastructure configurations are stored in Git

- Infrastructure changes are made through Git commits

- Configuration drift becomes visible in the next terraform plan, which compares the real infrastructure against the code

Declarative Infrastructure

- Infrastructure state is defined declaratively in Terraform files

- Kubernetes resources are defined as code

- Virtual clusters and applications are specified in configuration files

Automated Reconciliation

- Every commit automatically triggers the GitLab CI pipeline

- terraform plan computes the difference between the live infrastructure and the desired state in Git

- Validation runs automatically, and applying the plan takes a single manual approval

Pipeline-driven Deployment Model

- The pipeline fetches the desired state from the Git repository and pushes changes to CloudWorks

- CI/CD pipeline manages deployment automation

- Infrastructure updates follow the Git workflow

Benefits of This GitOps Approach

- Version Control
  - Complete history of infrastructure changes
  - Easy rollback capabilities
  - Clear audit trail
- Collaboration
  - Code review process for infrastructure changes
  - Team visibility into changes
  - Standardized workflow
- Security
  - No direct access to clusters needed
  - Changes are verified through pipeline
  - Credentials managed securely in GitLab
- Consistency
  - Reproducible deployments
  - Environment parity
  - Automated validation

Best Practices

To go further with Taikun CloudWorks, apply the following best practices:

1. Security

- Use GitLab CI variables for sensitive data

- Implement proper RBAC

- Regular security audits

- Enable network policies

2. Resource Management

- Implement proper tagging

- Regular cleanup of unused resources

- Monitor resource usage

- Set up cost alerts

3. Pipeline Organization

- Separate environments (dev/staging/prod)

- Include validation steps

- Implement manual approvals for production

- Add proper error handling
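One way to keep dev, staging, and prod apart is an environment variable that is validated up front and feeds into resource names. The sketch below is hypothetical: neither the variable nor the local exists in the project above. In GitLab you could set TF_VAR_environment per branch or per job. The pipeline already keys its Terraform state on CI_COMMIT_REF_NAME, so each branch also keeps separate state.

```hcl
# Hypothetical variable: reject anything other than the three known environments
variable "environment" {
  description = "Target environment for this deployment"
  type        = string
  default     = "dev"

  validation {
    condition     = contains(["dev", "staging", "prod"], var.environment)
    error_message = "The environment variable must be one of: dev, staging, prod."
  }
}

locals {
  # Hypothetical naming scheme, e.g. name = "${local.name_prefix}-project"
  name_prefix = "demo-${var.environment}"
}
```

A typo such as TF_VAR_environment=prd then fails during terraform plan in the prepare stage, before any manual approval is requested.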

Troubleshooting

If something goes wrong, start with these checks for the most common issues:

- Pipeline Failures
  - Check GitLab CI logs
  - Verify Terraform state
  - Validate credentials
- Resource Creation Issues
  - Verify cloud provider quotas
  - Check network connectivity
  - Validate configurations
- Application Deployment Problems
  - Check application logs
  - Verify Kubernetes configurations
  - Validate network policies

Built-in Enterprise Features

Our GitOps setup includes several enterprise-grade features out of the box:

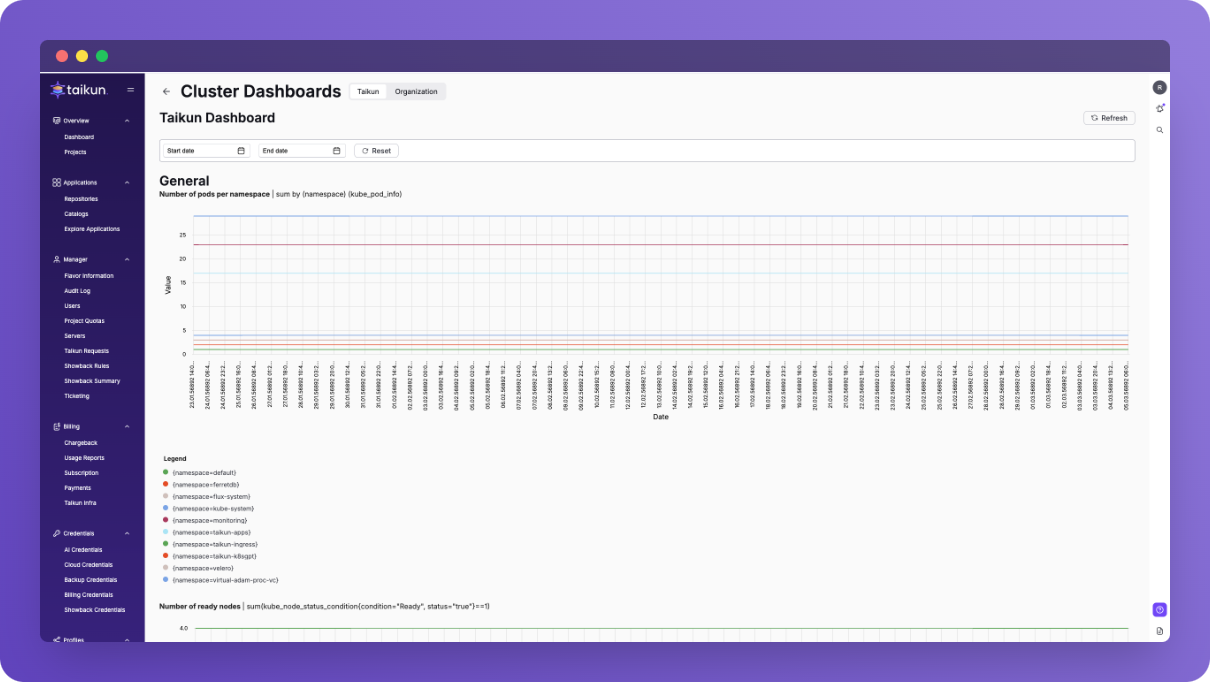

1. Monitoring and Alerting

Taikun CloudWorks provides built-in monitoring and alerting capabilities:

- Real-time cluster health monitoring

- Resource usage tracking

- Performance metrics

- Automated alerts for:
  - Resource constraints
  - Node failures
  - Application issues
  - Security concerns

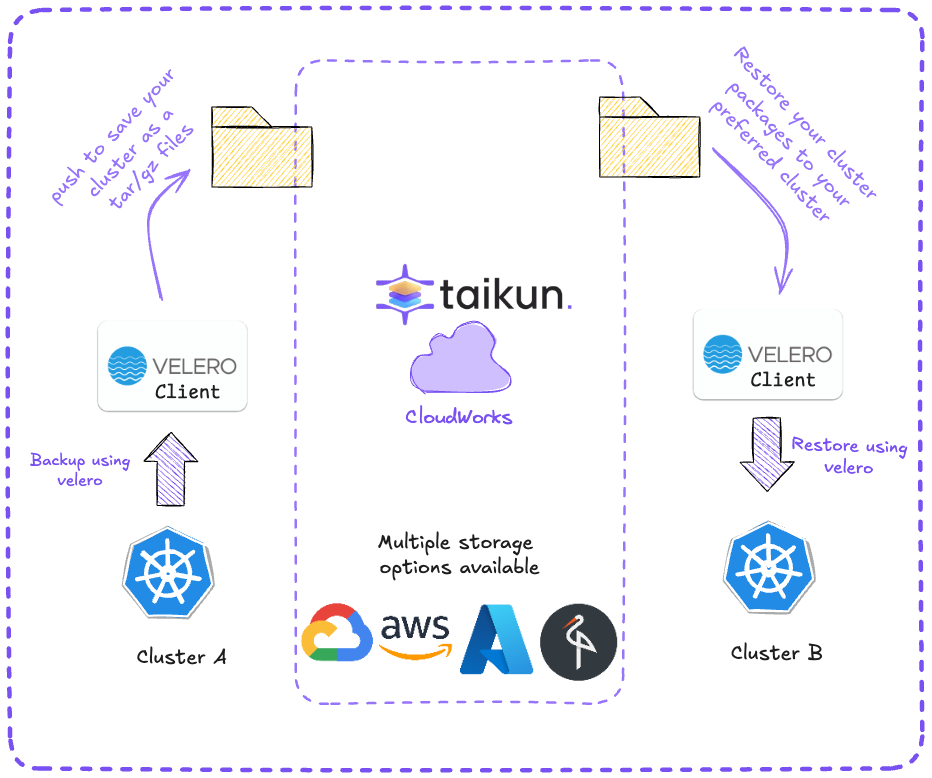

2. Backup Solution with Velero

The setup uses Velero for automated backups. The S3-compatible storage that the backups are written to is registered through a backup credential in backup.tf:

```hcl
resource "taikun_backup_credential" "backup" {
  name                 = "backup-tf"
  s3_access_key_id     = var.backup_user
  s3_secret_access_key = var.backup_password
  s3_endpoint          = var.backup_endpoint
  s3_region            = var.backup_region
}
```
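backup.tf references four input variables. A sketch of their declarations follows, with the secret key marked sensitive. The descriptions are illustrative. In line with the practice of keeping sensitive data in GitLab CI variables, you would provide the values as masked CI/CD variables such as TF_VAR_backup_password.

```hcl
# Sketch of the variables used by taikun_backup_credential.backup
variable "backup_user" {
  description = "S3 access key ID for the backup storage"
  type        = string
}

variable "backup_password" {
  description = "S3 secret access key for the backup storage"
  type        = string
  sensitive   = true # redacted in plan/apply output
}

variable "backup_endpoint" {
  description = "Endpoint URL of the S3-compatible storage"
  type        = string
}

variable "backup_region" {
  description = "Region of the S3-compatible storage"
  type        = string
}
```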

This provides:

- Automated cluster backups

- Application state preservation

- Configuration backups

- Point-in-time recovery options

3. Disaster Recovery

Our infrastructure implements disaster recovery through:

- Regular automated backups with Velero

- Multi-cluster architecture

- State preservation in S3-compatible storage

- Quick recovery procedures

4. Cost Optimization

Cost efficiency is achieved through virtual clusters (vClusters):

- Resource sharing between virtual clusters

- Optimized resource utilization

- Reduced infrastructure overhead

- Cost-effective multi-tenant architecture

Monitoring Dashboard

Taikun CloudWorks provides a comprehensive dashboard showing:

- Cluster health metrics

- Resource utilization

- Cost analysis

- Performance indicators

- Alert history

Backup and Recovery Workflow

These enterprise features are automatically configured and managed through our GitOps workflow, providing a production-ready infrastructure setup with minimal manual intervention.

Conclusion

This automated setup provides several benefits:

- Reduced deployment time

- Consistent environments

- Better resource utilization

- Improved security and compliance

The combination of GitLab CI, Terraform, CloudWorks, and vCluster creates a powerful, automated infrastructure management system that allows teams to focus on development while maintaining infrastructure reliability.

For more information, visit:

Taikun CloudWorks is a one-stop GitOps solution for your Kubernetes workloads. Book your free demo today, and let our team simplify, enhance, and streamline your infrastructure management.